Sunday April 26th

The Horror and Hegemony

I gave a talk at CMU’s Symposium on AI for Good

- I was supposed to present a poster at CMU, but the event went virtual, so I was told I’d have 5 minutes to give a talk. These are my slides (bottom of post).

- Basically, it was one of the most horrifying moments ever for me: I realized there were between 60 and 90 people there, notifications were going off in the chat, I couldn’t see the right-hand side of my screen, and the clock was ticking. But it was such a relief once it was over. I was thankful for the opportunity, and I heard from people afterwards who wanted to keep in touch about the progress of the project.

- What I got out of it was the chance to speak about a project I’m working on and to hear what other people are working on. That was thrilling, and I want to do it over and over.

I got into the project by accident

- I was searching for a project in class, and found a friend and classmate who was interested in working on a project in conjunction with the Economics department. Something about that was compelling to me. Maybe I just wanted to get away from the ego of Computer Science. Sometimes I feel like half of my mind is very passionate about Computer Science, programming, and tech, and the other part of me is ground down by the arrogance and ego that is associated with the culture in that field. And that part just wants a break.

- I found a cool spot on campus in that Department after I met with the professor, and I never really went back to hanging out in my own Department as much. I took naps in that spot, ate lunch, and listened to the professors greet each other in the morning. I became familiar with the floor’s office dog, and thumbed through free books left on shelves.

The work

- The project looks at implicit bias, in a way that might be helpful to the city. But we’re trying to do it in a way that is sensitive to the collection of data, privacy, the town’s history of conflict, and issues like trust and transparency, and with the knowledge that there are things that data (which is a proxy) can’t properly communicate.

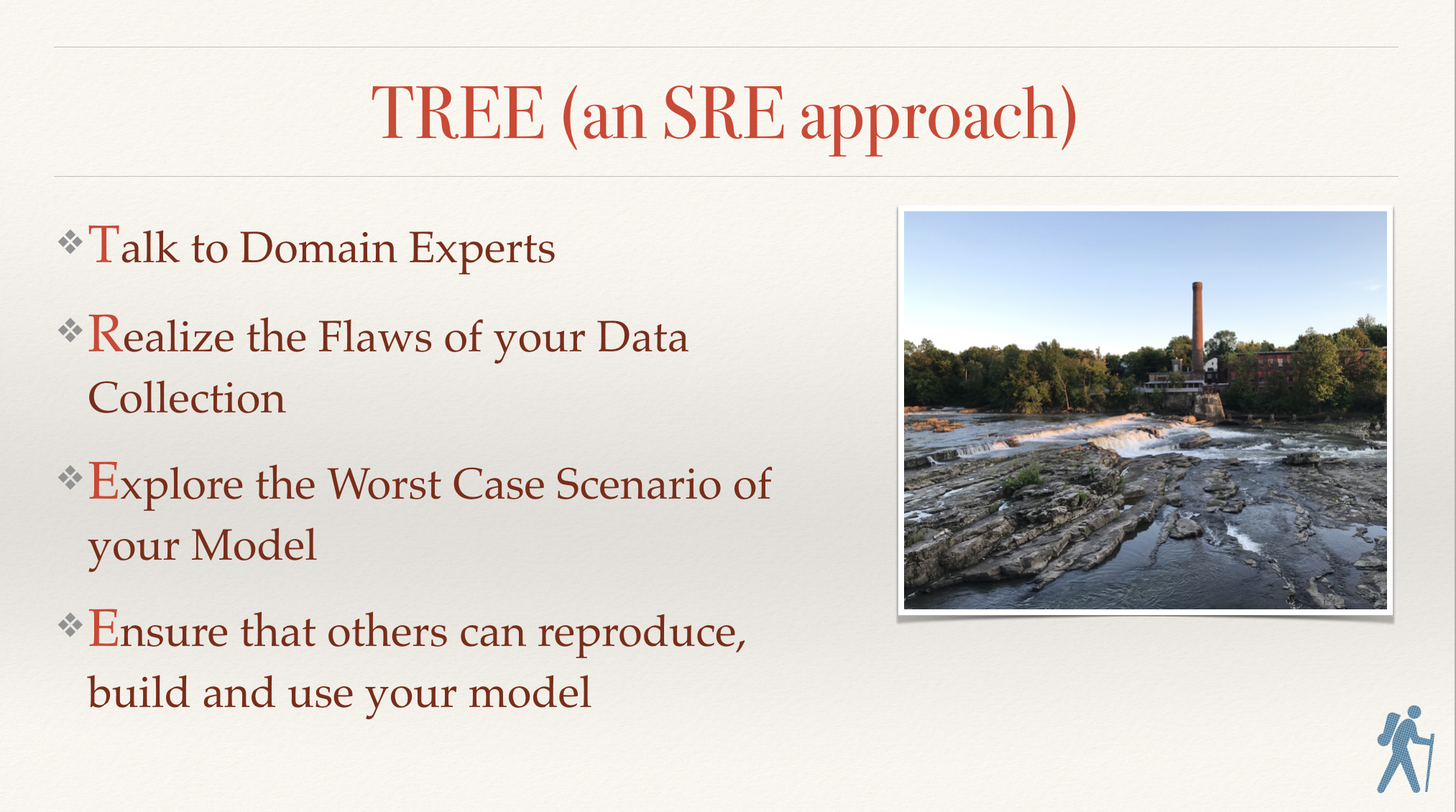

- It had me thinking about how the job of an SRE is to provide a robust model that improves over time and that helps developers; you are there to facilitate them. It’s an interesting parallel to how we can think about data collection and data modelling. The goal there is also to build a robust, automatable model (similar to what Machine Learning models aim to be): a system that responds well to failures, facilitates those who use the tools around it, and pays attention to a feedback loop from those who use it.

- Having spent some time archiving work (yes, newspaper clippings in giant file cabinets, as well as DV cassettes, before I attended Undergraduate study), I think that the Science community (I’m particularly looking at you who build fancy tools on GitHub!) can do more to help make these data collection and archival systems better. It’s the least we can do. We trivialize data collection, and regard it as a bit of a burden. We treat it as low-paid labour.

- I am not the biggest fan of downloading data from the internet and just using that. I’m highly sensitive to the idea that data is a proxy and a codified choice, yet there are so many fields that use it as a way of validating a specific point of view. Coming from a film background, where we engaged in critical theory and discussed how a camera, even with its promise of objectivity, is not objective, it’s easy for me to have issues with the concept of “data as truth”.

- I’m also a huge fan of coming back to the source of data, and iterating through the process. I really have a silent disdain for a once-and-for-all collection of data, to be used as “the model”. It’s frustrating to me, in ways I hint at later in this post. But broadly, it’s the same way historically colonialist countries made assessments about the region in which I grew up: make a trip, come to a conclusion, leave. These methods then perpetuated themselves in the materials that they disseminated into our culture (as we were under their control), and led to several decades of our citizens trying to figure out our own independent identities and historical truths from the half-truths and data points presented to us through textbooks they recommended for our “education”.

- How infantilized was our identity before we gained our Independence? I thought about this while growing up, comparing how some books would claim our national heroes were disrupters and troublemakers, while locally we celebrated their valiance and their vision for a country where we could have our own freedom.

- In short, you can see how I find things like “history”, “data” and “education” to be fundamentally problematic when presumed to be fundamentally representative of some holistic “truth”.

It’s a highly relevant topic

- Machine Learning is very data-centric. There are wonderful individuals dealing specifically with these challenges, and I was very pleased to hear from some of them today in keynotes during ICLR’s Trust and Privacy track.

It’s also relevant to me

- I am particularly sensitive to this topic not just because of my film background (where we spoke about topics like hegemony and objectification in recorded images), but also because it covers issues of consent and privacy (privacy being my focus for grad school), and because I am from a country with a rich history of grappling with the ideas and memory of postcolonialism. I see myself as a young researcher who is idealistic and optimistic and passionate about Machine Learning and Privacy, but some of the Academy’s assumptions sicken and exhaust me. And I’m frustrated because ego in a field makes things difficult to explain... until we hurt people. Or they revolt. I joked that we should have a Hegemonic Index for measuring this relationship in data. Maybe it’s not that funny of a joke, and it’s worth considering.

- I remember the work of Jose Clemente Orozco and Diego Rivera; they had so many things to say about the interaction of technology and humans and class structures. Why aren’t we re-exploring these points of view? Why aren’t we deconstructing what we assume to be true? Aren’t we scientists, after all? Isn’t it our job to be curious, seek out truth and be skeptical of things that present themselves as easy, lazy answers to questions?

Anyways…

- My researcher friend at UK Uni recommended some books. I’ve bought them. She studies Bio stuff and AI, paints, and collaborates on research dealing with Privacy and Ethics.

- One is “The Ethical Algorithm” by Kearns and Roth and the other is “Privacy: A Short History” by Vincent. If any of this resonates with you, I’d definitely recommend both!

Oh yeah, my slides

And that’s it

Written on April 26, 2020